Benutzer:Rdiez/ErrorHandling

| These are the personal user pages of rdiez, please do not modify them! The only exceptions are simple language corrections such as typos, wrong prepositions or similar. Please report anything else to the user only! |

Table of Contents

- 1 Error Handling in General and C++ Exceptions in Particular

- 1.1 Introduction

- 1.2 Causes of Neglect

- 1.3 Looking for a Balanced Strategy

- 1.4 How to Generate Helpful Error Messages

- 1.5 How to Write Error Handlers

- 1.6 Why You Should Use Exceptions

- 1.7 General Do's and Don'ts

- 1.7.1 Do Not Make Logic Decisions Based on the Type of Error

- 1.7.2 Never Ignore Error Indications

- 1.7.3 Restrict Expected, Ignored Failures to a Minimum

- 1.7.4 Never Kill Threads

- 1.7.5 Check Errors from printf() too

- 1.7.6 Use a Hardware Watchdog

- 1.7.7 Assertions Are No Substitute for Proper Error Handling

- 1.8 Exception-Specific Do's and Don'ts

- 1.8.1 Do Not Use Exceptions for Anything Else Than Error Conditions

- 1.8.2 Avoid Throwing in Destructors

- 1.8.3 Handle Unknown Exceptions

- 1.8.4 Never Throw Pointers to Dynamically-Allocated Objects

- 1.8.5 Never Use std::uncaught_exception()

- 1.8.6 Using Smart Pointers for Clean-Up Purposes

- 1.8.7 Write Exception-Safe C++ Constructors

- 1.9 Writing Good Error-Handling Code without Exceptions

- 1.10 Mixing and Matching Error-Handling Strategies

- 1.11 Improving Error Handling in an Old Codebase

- 1.12 About Error Codes

- 1.13 Providing Rich Error Information

- 1.14 Error Message Delivery

- 1.14.1 Preventing Sensitive Information Leakage

- 1.14.2 Delivering Error Information in Applications with a User Interface

- 1.14.3 Delivering Error Information in Embedded Applications

- 1.14.4 Delivering Error Information over the Network

- 1.14.5 Delivering Error Information between Threads

- 1.14.6 Delivering Error Information about Failed Tasks

- 1.15 Internationalisation

- 1.16 Helper Routines

- 1.17 Error Handling in Some Specific Platforms

Error Handling in General and C++ Exceptions in Particular

Introduction

Motivation

The software industry does not seem to take software quality seriously, and a good part of it falls into the error-handling category. After putting up for years with so much misinformation, so many half-truths and with a general sentiment of apathy on the subject, I finally decided to write a lengthy article about error handling in general and C++ exceptions in particular.

I am not a professional technical writer and I cannot afford the time to start a long discussion on the subject, but I still welcome feedback, so feel free to drop me a line if you find any mistakes or would like to see some other aspect covered here.

Scope

This document focuses on the "normal" software development scenarios for user-facing applications or for non-critical embedded systems. There are of course other areas not covered here: there are systems where errors are measured, tolerated, compensated for or even incorporated into the decision process.

Audience

This document is meant for software developers who have already gathered a reasonable amount of programming experience. The main goal is to give practical information and describe effective techniques for day-to-day work.

Although you can probably guess how C++ exceptions work from the source code examples below, it is expected that you already know the basics, especially the concept of stack unwinding upon raising (throwing) an exception. Look into your favourite C++ book for a detailed description of exception semantics and their syntax peculiarities.

Causes of Neglect

Proper error-handling logic is what sets professional developers apart. Writing quality error handlers requires continuous discipline during development, because it is a tedious task that can easily cost more than the normal application logic for the sunny-day scenario that the developer is paid to write. Testing error paths manually with the debugger is recommended practice, but that doesn't make it any less time-consuming. Repeatable test cases that feed the code with invalid data sequences in order to trigger and test each possible error scenario are a rare luxury. This is why error handling in general needs constant encouragement through systematic code reviews or through separate testing personnel. In my experience, a lack of good error handling is also a symptom that the code hasn't been properly developed and tested. A quick look at the error handlers in the source code can give you a pretty reliable measurement of the general code quality.

The fact that most example code snippets in software documentation do not bother checking for error conditions, let alone handling them gracefully, does not help either. This gives the impression that the official developers do not take error handling seriously, like everybody else, so you don't need to either. Sometimes you'll find the excuse of keeping those examples free from "clutter". However, when using a new syscall or library call, working out how to check for errors and how to collect the corresponding error information can take longer than coding its normal usage scenario, so this aspect would actually be most helpful in the usage example. There is some hope, however, as I have noticed that some API usage examples in Microsoft's documentation now include error checks with "handle the error here" comments below. While it is still not enough, it is better than nothing.

It is hard to assess how much value robust error handling brings to the end product, and therefore any extra development costs in this field are hard to justify. Free-software developers are often investing their own spare time and frequently take shortcuts in this area. Software contracts are usually drafted on positive terms describing what the software should do, and robustness in the face of errors then gets relegated to some implied general quality standards that are not properly described or quantified. Furthermore, when a customer tests a software product for acceptance, he is primarily worried about fulfilling the contractual obligations in the "normal", non-error case, and that tends to be hard enough. That the software is brittle either goes unnoticed or is not properly rated in the software bug list.

As a result, small software errors often cascade into great disasters, because all the error paths in between fail one after the other across all the different software layers and communicating devices, as error handlers hardly ever got any attention. But even in this scenario, the common excuse sounds like "yes, but my part wouldn't have failed if the previous one hadn't in the first place".

In addition to all of the above, when the error-handling logic does fail, or when it does not yield helpful information for troubleshooting purposes, it tends to impact first and foremost the users' budget, and not the developer's, and that normally happens after the delivery and payment dates. Even if the error does come back to the original developer, it may find its way through a separate support department, which may even be able to provide a work-around and further justify the business case for that same support department. If nothing else helps, the developer's urgent help is then suddenly required for a real-world, important business problem, which may help make that original developer a well-regarded, irreplaceable person. After all, only the original person understands the code well enough to figure out what went wrong, and any newcomers will shy away from making any changes to a brittle codebase. This scenario can also hold true in open-source communities, where social credit from quickly fixing bugs may be more relevant than introducing those bugs in the first place. All these factors conspire to make poor error handling an attractive business strategy.

In the end, error handling gets mostly neglected, and that reflects in our day-to-day experience with computer software. I have seen plenty of jokes around about unhelpful or funny error messages. Many security issues have their roots in incorrect error detection or handling, and such issues are still getting patched on a weekly rhythm for operating system releases that have been considered stable for years.

Looking for a Balanced Strategy

Definition of Error

In the context of this document, an error is an indication that an operation failed to execute. The reason why it failed is normally included, in the form of an error code, error message, source code position, etc. An error is normally considered to be fatal, which means that retrying the failed operation straight away would only lead to the same error again.

When writing code, the general assumption is that everything should work fine all of the time, so errors are exceptions to the rule. In fact, most code a computer runs executes successfully.

When an error happens, the software should deal with it. Here are some possibilities:

- Retry the failed operation a few times before giving up.

- Tolerate the error. Maybe look for an alternative action.

- Automatically correct the error.

- Report the error and let the next level up decide what to do.

Errors are often forwarded from software layer to software layer until they reach the human operator.

Non-errors

This document does not cover the subjects of error correction or error tolerance. However, it is worth noting that, if there is a way to tolerate or correct an error, or if there is an alternative action, chances are that the first failure was not completely unexpected. If a particular error condition is expected to occur often and has specific code to deal with it, it should probably not be regarded as a fatal error, but as a normal scenario that is handled in the standard application logic. Normal scenarios should not raise C++ exceptions or use the error-handling support routines.

Consider an application that looks for its configuration files in several places. The bash shell, for example, reads and executes commands from /etc/bash.bashrc and ~/.bashrc on start-up, if these files exist. If the application tries to open the first configuration file, and it does not exist, it should not regard it as an error condition and raise a standard error exception. Such an exception would have to be caught in the standard error handler, which would have to implement a filter in order to ignore that particular error for that particular file. If you think about it, it is documented that those files may not exist, and that is not really an error.

Instead, the application should check beforehand if the first configuration file exists with the stat syscall. Alternatively it should check if the open syscall returns error code ENOENT. That may mean calling open directly, or an alternative wrapper function open_if_exists_e(), for the first configuration file, instead of using the usual open_e() wrapper (see further below about writing such helper routines). The open_e() wrapper would raise a standard error if the file does not exist, and should be used only in situations where a file is expected to exist, and if it does not, then it's a real, unexpected, fatal error condition.
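The two wrappers mentioned above could look roughly like the following sketch. The names open_e() and open_if_exists_e() come from the text, but their signatures, the use of std::optional (C++17) and the wording of the error message are assumptions made for illustration only.

```cpp
#include <cassert>
#include <cerrno>
#include <cstring>
#include <optional>
#include <stdexcept>
#include <string>

#include <fcntl.h>  // POSIX open()

// Builds a message like: Error opening file "x": No such file or directory
static std::string format_open_error(const std::string& path, int err)
{
    return "Error opening file \"" + path + "\": " + std::strerror(err);
}

// Use this when the file is expected to exist: any failure is a real error.
// (Assumed signature, the text only names the routine.)
int open_e(const std::string& path, int flags)
{
    const int fd = ::open(path.c_str(), flags);
    if (fd == -1)
    {
        const int err = errno;  // Save errno before anything else can change it.
        throw std::runtime_error(format_open_error(path, err));
    }
    return fd;
}

// Use this when a missing file is a documented, normal scenario.
// Returns an empty optional if the file does not exist (ENOENT),
// and throws on any other failure.
std::optional<int> open_if_exists_e(const std::string& path, int flags)
{
    const int fd = ::open(path.c_str(), flags);
    if (fd != -1)
        return fd;

    const int err = errno;
    if (err == ENOENT)
        return std::nullopt;

    throw std::runtime_error(format_open_error(path, err));
}
```

With such a pair, the start-up code would call open_if_exists_e() for each optional configuration file and simply skip the ones that yield an empty result, so that no error handler needs to filter out "file not found".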

Goals

Robust error handling is costly but it is an important aspect of software development. Choosing a good strategy from the beginning reduces costs in the long run. These are the main goals:

- Provide helpful error messages.

- Deliver the error messages timely and to the right person.

The developer may want more information than the user.

- Limit the fallout after an error condition.

Only the operation that failed should be affected, the rest should continue to run.

- Reduce the development costs of:

- adding error checks to the source code.

- repurposing existing code.

Non-goals are:

- Improve software fault tolerance.

Normally, when an error occurs, the operation that caused it is considered to have failed. This document does not deal with error tolerance at all.

- Optimise error-handling performance.

In normal scenarios, only the successful (non-error) paths need to be fast. This may not hold true on critical, real-time systems, where the error response time needs to meet certain constraints.

- Optimise memory consumption.

Good error messages and proper error handling come at a cost, but the investment almost always pays off.

Implicit Transaction Semantics

Whenever a routine reports an error, the underlying assumption is that the whole operation failed, and not just part of it, because recovering from a partial failure is very difficult. Transaction semantics simplify error handling considerably and are usually expected. This means that, when a routine fails, it should automatically "clean up" before reporting the error. That is, the routine must roll back any steps it had performed towards its goal. The idea is that, once the error cause is eliminated, calling the routine again should always succeed.

Now consider a scenario where routine PrintFile() opens a given file and then fails to print its contents because the printer happens to be off-line. The caller expects that the opened filehandle has been automatically closed upon failure. Otherwise, when the printer comes back online, the next call to PrintFile() could fail, because the previous unclosed filehandle may hold an exclusive file lock. The automatic clean-up assumption is the only feasible way to write code, for the caller cannot close the file handle itself in the case of error, as it may not even know that a file handle was involved at all.
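In C++, one common way to get this automatic clean-up is to let a destructor close the file during stack unwinding. The following is only a minimal sketch: PrintFile() is the routine named above, whereas FileCloser, FilePtr and SendToPrinter() are hypothetical names, and SendToPrinter() always fails here in order to simulate the off-line printer.

```cpp
#include <cassert>
#include <cstdio>
#include <memory>
#include <stdexcept>
#include <string>

// Closes the FILE automatically when the owning unique_ptr goes out of scope.
struct FileCloser
{
    void operator()(std::FILE* f) const noexcept { std::fclose(f); }
};
using FilePtr = std::unique_ptr<std::FILE, FileCloser>;

// Hypothetical stand-in for the real printing code; it always fails here.
void SendToPrinter(std::FILE*)
{
    throw std::runtime_error("The printer is off-line.");
}

void PrintFile(const std::string& filename)
{
    FilePtr file(std::fopen(filename.c_str(), "rb"));
    if (!file)
        throw std::runtime_error("Error opening file \"" + filename + "\".");

    // If this throws, stack unwinding destroys 'file' and closes the handle,
    // so the caller never needs to know that a file was involved.
    SendToPrinter(file.get());
}
```

The caller only sees the exception. By the time it arrives, the file has already been closed, so a later call to PrintFile() starts from a clean state.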

Another example would be a failed bank money transfer: if the source account has been charged, and the destination account fails to receive the money, you need to undo the charge on the source account before reporting the money transfer as failed.

Errors When Handling a Previous Error

An error handler may have several tasks to perform, such as cleaning up resources, rolling any half-finished actions back, writing an entry to the application log or adding further information to the original error. If one of those operations fails, you will have the unpleasant situation of dealing with a secondary error. It is always hard to deal with errors inside error handlers for the following reasons:

- Error handlers can usually deal with a single error. The secondary error may get lost, or it may mask the original error.

- An error during the clean-up phase may yield a memory or resource leak.

- A failed roll-back phase may break the "complete success or complete failure" rule and leave a partially-completed operation behind.

- There is a never-ending recursion here: if you write code in the first-level error handler in order to deal with a secondary error, you may encounter yet another error there too. That would be a tertiary error then. Now, if you try to deal with a tertiary error in the second-level error handler, then...

The following rules help keep the cost of writing error handlers under control:

- Write code with an eventual roll-back in mind.

- Minimize room for failure in the error handlers.

- Assume that error handlers have no bugs. If you detect an error within an error handler, terminate the application abruptly. This means that testing error handlers becomes critical. Fortunately, bugs inside error handlers tend to be rare.

For example, say routine ModifyFiles() needs to modify 2 files named A and B. You could do this:

- Open file A.

- Modify file A.

- Close file A.

- Open file B.

- Modify file B.

- Close file B.

The trouble is, if an error happens opening file B, it's hard to roll back any changes in file A. Keep in mind that opening a file is the operation most likely to fail.

You would be less exposed to errors if you implemented the following sequence instead:

- Open file A.

- Open file B.

- Modify file A.

- Modify file B.

- Close file A.

- Close file B.

The clean-up logic in case of error has been reduced to 2 filehandle closing operations, which are unlikely to fail. If something as straightforward as that still fails, look at section "Abrupt Termination" below for reasons why such a drastic action may be the best option.
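The second ordering (open everything first, modify afterwards) might be sketched as follows. ModifyFiles() is the routine from the text. OpenForUpdate() and AppendLine() are hypothetical helpers, and appending a line merely stands in for whatever the real modification would be.

```cpp
#include <cassert>
#include <fstream>
#include <stdexcept>
#include <string>

// Opens an existing file for reading and writing; throws if that fails.
std::fstream OpenForUpdate(const std::string& filename)
{
    std::fstream f(filename, std::ios::in | std::ios::out | std::ios::binary);
    if (!f)
        throw std::runtime_error("Error opening file \"" + filename + "\".");
    return f;
}

// Hypothetical modification: appends one line and flushes it to the file.
void AppendLine(std::fstream& f, const std::string& line)
{
    f.seekp(0, std::ios::end);
    f << line << '\n';
    f.flush();
    if (!f)
        throw std::runtime_error("Error writing to file.");
}

void ModifyFiles(const std::string& filenameA, const std::string& filenameB)
{
    // Both files are opened before anything is modified, so a failure
    // at this stage leaves both files untouched.
    std::fstream fileA = OpenForUpdate(filenameA);
    std::fstream fileB = OpenForUpdate(filenameB);

    AppendLine(fileA, "modified");
    AppendLine(fileB, "modified");

    // The fstream destructors close both files, also when an exception
    // is thrown above.
}
```

Note that a write failure in the middle can still leave file A modified and file B not. The reordering only removes the most likely failure, opening a file, from the part that is hard to roll back.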

This approach is even better:

- Open file A.

- Open file B.

- Create file A2.

- Create file B2.

- Copy file A contents to file A2.

- Modify file A2.

- Copy file B contents to file B2.

- Modify file B2.

- Close file A.

- Close file A2.

- Close file B.

- Close file B2.

- Checkpoint, see below for more information.

- Delete file A.

- Delete file B.

- Rename file A2 to file A.

- Rename file B2 to file B.

If anything fails before the checkpoint, the error handler only has to close file descriptors, which is unlikely to fail. After the checkpoint, there is no easy way to recover from errors. However, if the files already exist, deleting and renaming them is also likely to succeed.
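The copy-then-replace sequence above could be sketched with std::filesystem (C++17) as shown below. This is a simplification under stated assumptions: copy_file() collapses the separate open, create, copy and close steps into one call, the ".new" suffix and the ModifyCopy() helper are made up for the example, and the error handler also deletes the working copies, which the list above does not mention.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <stdexcept>
#include <string>
#include <system_error>

namespace fs = std::filesystem;

// Hypothetical modification step: appends one line to the working copy.
void ModifyCopy(const fs::path& p)
{
    std::ofstream out(p, std::ios::app);
    out << "modified\n";
    out.close();
    if (!out)
        throw std::runtime_error("Error writing to file \"" + p.string() + "\".");
}

void ModifyFiles(const fs::path& a, const fs::path& b)
{
    const fs::path a2(a.string() + ".new");
    const fs::path b2(b.string() + ".new");

    try
    {
        // Before the checkpoint: the originals are only read, never written.
        fs::copy_file(a, a2, fs::copy_options::overwrite_existing);
        fs::copy_file(b, b2, fs::copy_options::overwrite_existing);
        ModifyCopy(a2);
        ModifyCopy(b2);
    }
    catch (...)
    {
        // Roll back by discarding the working copies.
        // The std::error_code overloads report failure without throwing.
        std::error_code ignored;
        fs::remove(a2, ignored);
        fs::remove(b2, ignored);
        throw;
    }

    // Checkpoint. From here on, a failure may leave a mix of old and
    // new files behind, and there is no easy way to recover.
    fs::remove(a);
    fs::remove(b);
    fs::rename(a2, a);
    fs::rename(b2, b);
}
```

If the second file does not exist, for example, the call throws and the first file is left exactly as it was.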

The scenario above occurs so often that even mainstream Operating Systems are starting to implement transactional support in their filesystems. There is even transactional memory support in some platforms, although it is not normally designed for error-handling purposes. If your filesystem supports transactions, and assuming that modifying the files takes a long time and consumes many system resources, you could protect the whole operation with minimal effort like this:

- Do everything above up until the checkpoint.

- Begin filesystem transaction.

- Delete file A.

- Delete file B.

- Rename file A2 to file A.

- Rename file B2 to file B.

- End filesystem transaction.

If an error occurs within the transaction, it is unlikely that the transaction rollback fails. After all, it is a service provided by the Operating System specifically designed for that purpose. Should the rollback nevertheless fail, these are your options:

- Ignore the rollback error and report the original error.

You may leave inconsistent data behind, see below.

- Report the secondary error.

Note that raising a normal error from inside clean-up code may give the caller the wrong impression about what really happened, as the context information for the first error will not be present in the error message.

- Report both errors together. But merging the errors could also fail, generating a tertiary error. And so on.

- Terminate the application abruptly.

Reporting any of the errors and then carrying on is risky, as you may leave a half-finished operation behind. Think of a money transfer where the money is neither in the source nor in the destination account. You could try to add to the error message an indication that the data may be corrupt, but that hint is only useful to a human operator. Consider adding to our ModifyFiles() routine a boolean flag to indicate that the transaction has only partially succeeded. What should an automated caller do in that case? Let's say that a human operator gets the message with that special hint: he will probably ignore it if he is in a hurry. Assume for a moment that the human operator wants to do something about it. He will probably not be able to fix the data inconsistency with normal means anyway. Shortly afterwards, further transactions could keep coming, maybe through other human operators, and those transactions could now build upon the inconsistent data. Fixing the mess afterwards may be really hard indeed.

In such a situation, abruptly terminating the application may actually be the best option. At the very least, the operator will wonder whether the transaction succeeded or not, and will probably check afterwards. But more often than not, such a catastrophic crash will prompt the intervention of a system administrator, or trigger some higher-level backup file recovery mechanism that can deal with the data consistency problem more effectively.

Compromises

Writing good error-handling logic can be costly, and sometimes compromises must be made:

Unpleasant Error Messages

In order to keep development costs under control, the techniques described below may tend to generate error messages that are too long or unpleasant to read. However, such drawbacks are easily outweighed by the disadvantages of delivering too little error information. After all, errors should be the exception rather than the rule, so users should not need to read too many error messages during normal operation.

Abrupt Termination

Sometimes, it may be better to let an application panic on a severe error than to try and cope with the error condition or ignore it altogether.

Some error conditions may indicate that memory is corrupt or that a data structure has invalid information that hasn't been detected soon enough. If the application carries on, its behaviour may well be undefined (it may act randomly), which may be even more undesirable than an instant crash.

Leaving a memory, handle or resource leak behind is not an option either, because the application will crash or fail later on for a seemingly random reason. The user will probably not be able to provide an accurate error report, and the error will not be easy to reproduce either. The real cause will be very hard to discover and the user will quickly lose confidence in the general application stability.

Some errors are just too expensive or virtually impossible to handle, especially when they occur in clean-up sections. An example could be a failed close( file_descriptor ); syscall in a clean-up section, which should never fail, and when it does, there is not much the error handler can do about it. In most cases, a file descriptor is closed after the work has been done. If the descriptor fails to close, the code probably attempted to close the wrong one, leaving a handle leak behind. Or the descriptor was already closed, in which case it's probably a very simple logic error that will manifest itself early and is easy to fix. For more details about close()'s possible error codes, check out LWN article Returning EINTR from close().

See section "Errors When Handling a Previous Error" above for other error conditions that could break the transaction semantics (the 'fully succeeded' or 'fully failed' rule). Leaving corrupt or inconsistent data behind is probably worse than an instant crash too. At some point during development of clean-up and error-handling code, you'll have to draw the line and treat some errors as irrecoverable panics. Otherwise, the code will get too complicated to maintain economically.
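As an illustration of drawing that line, a clean-up helper could treat a failed close() as a panic instead of trying to report it as a normal error. The routine name close_or_panic() and the message format are assumptions for this sketch, and it deliberately leaves out any special treatment of EINTR.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdio>
#include <cstdlib>
#include <cstring>

#include <unistd.h>  // POSIX close()

// Closes a descriptor in a clean-up path. A failure here most likely means
// a logic error (wrong or already-closed descriptor), so the process stops
// with a short report rather than carrying on in an unknown state.
void close_or_panic(int fd) noexcept
{
    if (::close(fd) != 0)
    {
        std::fprintf(stderr, "Panic: close(%d) failed: %s\n",
                     fd, std::strerror(errno));
        std::abort();
    }
}
```

Because the helper never throws, it can be called from destructors and from catch blocks without creating a secondary error.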

Abrupt termination is always unpleasant, but a controlled crash at least lets the user know what went wrong. Although it may sound counterintuitive, such an immediate crash will probably help improve the software quality in the long run, as there will be an incentive to fix the error quickly together with a helpful panic report.

After all, if you are worried about adding "artificial" panic points to your source code, keep in mind that you will not be able to completely rule out abrupt termination anyway. Just touching a NULL pointer, freeing the same memory block twice, calling some OS syscall with the wrong memory address or using too much stack space at the wrong place may terminate your application at once.

Besides, a complete crash will trigger any emergency mechanism installed, like reverting to the last consistent data backup, automatically restarting the failed service/daemon and timely alerting human system administrators. Such a recovery course may be better than any unpredictable behaviour down the line due to a previous error that was handled incorrectly.

Do Not Install Your Own Critical Error Handler

Some people are tempted to write clever unexpected error handlers to help deal with panics, or even avoid them completely. However, it is usually better to focus on the emergency recovery procedures after the crash rather than installing your own crash handler in an attempt at capturing more error information or surviving the unknown error condition.

Your Operating System will probably do a better job at collecting crash information; you may just need to enable its crash reporting features. You may have to provide some end-user documentation on how to set up WinDbg on Windows in order to automatically store a local crash dump file if your application crashes. Some Linux distributions do not collect crash dump files by default, so you may have to find out how to enable it on the typical user's PC. Other than enabling such OS features, you don't want to interfere with the system's crash dump handler, because, if your application's memory or resource handlers are already corrupt or invalid, trying to run your own crash handler may make matters worse and corrupt the crash information or even mask the crash reason altogether.

Getting an in-application crash handler right is hard if not downright impossible, and I've seen quite a few of them failing themselves after the first application failure they were supposed to report. If you have time to spare on fatal error scenarios, try to minimise their consequences by designing the software so that it can crash at any time without serious consequences. For example, you could save the user data at regular intervals before the crash, like some text editors or word processors do. In fact, this approach is gaining popularity with the advent of smartphone apps and Windows 8 Metro-style applications, where the Operating System may suddenly yank an app from memory without warning.

For other kinds of software, you can also consider configuring the system to automatically restart any important service when it crashes, or to trigger some automated data recovery mechanism. Finally, you may also direct your remaining efforts at improving your software quality process instead.

Do Not Translate Windows SEH Exceptions into C++ Exceptions

Under Windows, you may be tempted to translate Structured Exception Handling (SEH) exceptions into C++ exceptions. In fact, I think that some version of Microsoft's Visual Studio used to do that automatically, unless you manually turned it off on start-up. See compiler switch /EHa for more information.

You should resist the temptation. If your application raises an SEH exception, chances are that there is something seriously wrong and aborting the application may be your best option. See section "Abrupt Termination" above for more information.

The only case where you could translate the SEH exception half-way safely is when your application "cleanly" references a NULL pointer. After all, most code uses the special NULL value to indicate that the information a pointer refers to is not available yet.

But even in this scenario, if an SEH exception triggers, you cannot be sure that this was a "clean" NULL pointer access with a pointer where that special meaning of NULL makes sense. Instead, your application may have just read a piece of zeroed memory, or jumped to a random piece of code. Furthermore, this kind of translation mechanism is not portable to other platforms.

Handling an Out-of-Memory Situation

Handling an out-of-memory situation is difficult and most software does not bother even trying. In my experience, even mainstream Operating Systems tend to crash completely when the system is short of memory. If not, at the very least important system services stop functioning properly at that point. After all, most system services are now implemented as normal user-space processes.

Fortunately, out-of-memory scenarios are rare nowadays. Some systems take a cavalier approach and start killing user applications in order to release memory; look for the Linux OOM Killer for more information. And, due to the wonders of virtual memory, your system will probably thrash itself to death before your application ever gets a NULL pointer back from malloc.

If you are writing embedded software, you must prevent excessive memory usage by design. This can be hard, as it is often difficult to estimate how much heap memory a given data structure will consume. If an application needs to process random external events and uses several complex data structures, you may have to resort to empirical evidence. In debug builds, you should run a periodic assertion on the amount of free memory left, so that you get an early warning when you approach the limit during development.

In any case, when your application encounters an out-of-memory situation, there is not much it can do about it. Attempting to handle such an error will probably fail as well, as handling an error usually needs memory too.

In order to alleviate the problem, your application could stop accepting new requests or processing new events if the available memory falls under some limit. The trouble is, the amount of available memory can change drastically without notice, so the memory may suddenly run out just after the free memory check has passed. For many embedded applications, not accepting requests any more is far worse than a controlled crash. Keep in mind that most out-of-memory conditions are caused by a memory or resource leak in your own software. If your application stopped accepting requests when low on memory, you would be effectively turning a memory leak into an application freeze or denial-of-service situation. A controlled crash-and-restart would clean the memory leak and allow the application to function again, if only until the next restart.

A common "solution" is to write a malloc wrapper that terminates the application abruptly if there is no memory left. This way, the user will get a notification at the first point of failure. Otherwise, if the wrapper throws a standard error, the error handler will probably fail again for the same reason anyway, and the application might hang or misbehave instead of terminating "properly". The malloc wrapper could also monitor the amount of free memory left in debug builds, in order to provide the early warning described above.
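Such a wrapper can be very small. The sketch below uses the hypothetical name malloc_or_panic() and leaves out the debug-build monitoring of free memory, which would depend on the platform.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstdlib>

// Allocates memory or terminates the process. For a non-zero size,
// the caller never sees a null pointer.
void* malloc_or_panic(std::size_t size)
{
    void* const p = std::malloc(size);

    // malloc(0) may legitimately return a null pointer, so only a null
    // result for a non-zero size counts as an out-of-memory condition.
    if (p == nullptr && size != 0)
    {
        // A fixed string avoids formatting work that might itself need memory.
        std::fputs("Panic: out of memory.\n", stderr);
        std::abort();
    }

    return p;
}
```

Memory obtained this way is released with free() as usual. In C++ code, the same idea would also have to cover operator new, which is not shown here.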

You could also write your error-handling helper routines in such a robust manner that they still work in low-memory situations. For example, you can pre-allocate a fixed memory buffer per thread in order to hold at least a reasonably-long error message, should a normal malloc() call fail. But then you will not be able to use the standard std::runtime_error or std::string classes. And your compiler and run-time libraries will conspire against you, for even throwing an empty C++ exception with GCC will allocate a piece of dynamic memory behind the scenes. And the first pre-allocation per thread may fail. And the code will become more complex than you might imagine. And so on.

Therefore, if your application allocates big memory buffers, you should probably handle the eventual out-of-memory error when creating them. For all other small memory allocations, it is probably not worth writing special code in order to deal with it. It may even be desirable to crash straight away.

How to Generate Helpful Error Messages

Let's say you press the 'print' button on your accounting application and the printing fails. Here are some example error messages, ordered by message quality:

- Kernel panic / blue screen / access violation.

- Nothing gets printed, and there is no error message.

- There was an error.

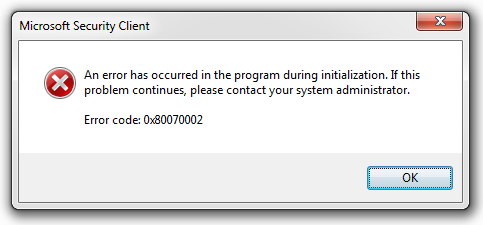

- Error 0x03A5.

- Error 0x03A5: Write access denied.

- Error opening file: Error 0x03A5: Write access denied.

- Error opening file "invoice arrears.txt": Error 0x03A5: Write access denied.

- Error printing letters for invoice arrears: Error opening file "invoice arrears.txt": Error 0x03A5: Write access denied.

- I cannot start printing the letters because write access to file "invoice arrears.txt" was denied.

- Before trying to print those letters, please remove the write-protection tab from the SD Card.

In order to do that, remove the little memory card you just inserted and flip over the tiny white plastic switch on its left side.

- You don't need to print those letters. Those customers are not going to pay. Get over it.

Let's evaluate each of the error messages above:

- Worst-case scenario.

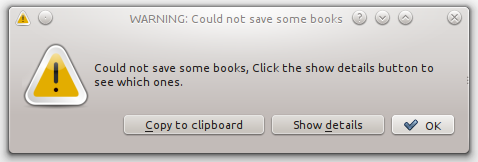

- Awful. Have you ever waited to no avail for a page to come out of a printer?

When printing, there usually is no success indication either, so the user will wonder and probably try again after a few seconds. If the operation did not actually fail, but the printer just happens to be a little slow, he will end up with 2 or more printed copies. It happens to me all the time, and we live in 2013 now.

If the printing did fail, where should the user find the error cause? He could try and find the printer's spooler queue application. Or he could try with 'strace'. Or look in the system log file. Or maybe the CUPS printing service maintains a separate log file somewhere?

- Negligent development.

- Unprofessional development.

- You show some hope as a programmer.

- You are getting the idea.

- You are implementing the idea properly.

- This is the most that you can achieve in practice.

The error message has been generated by a computer, and it shows: it is too long, clunky and sounds artificial. But the error message is still helpful, and it contains enough information for the user to try to understand what went wrong, and for the developer to quickly pin-point the issue. It's a workable compromise.

- Unrealistic. This text implies that the error message generation was deferred to a point where both knowledge was available about the high-level operation that was being carried out (printing letters) and about the particular low-level operation that failed (opening a file). This kind of error-handling logic would be too hard to implement in real life.

Alternatively, the software could check the most common error scenarios upfront, before attempting to print the letters. However, that strategy does not scale, and it's not worth implementing if the standard error-handling is properly written. Consider checking beforehand if there is any paper left in the printer. If the user happens to have a printer where the paper level reporting does not work properly, the upfront check would not let him print, even if it would actually work. Implementing an "ignore this advance warning" would fix it, but you don't want the user to dismiss that warning every time. Should you also implement a "don't show this warning again today" button for each possible advance warning?

- In your dreams. But there is an aspect of this message that the Operating System could have provided in the messages above: instead of saying "write access denied", it could have said "write access denied because the storage medium is write protected". Or, better still, "cannot modify the file because the memory card is physically write protected". That is doable, because it's a common error and the OS could internally find out the reason for the write protection and provide a textual description of the write-protected media type. But Linux could never build such error messages with its errno-style error reporting.

Providing a hint about fixing the problem is not as unrealistic as it might appear at first. After all, Apple's NSError class in the Cocoa framework has fields like localizedRecoverySuggestion, localizedRecoveryOptions and even NSErrorRecoveryAttempting. I do think that such a fine-grained implementation is overkill and hard to implement in practice across operating systems and libraries, but providing a helpful recovery hint in the error message could be achievable.

- Your computer has become self-aware. You may stop worrying now about error handling in your source code.

Therefore, the best achievable error message in practice, assuming that the Operating System developers have read this guide too, would be:

- Error printing letters for invoice arrears: Error opening file "invoice arrears.txt": Cannot open the file with write access. Try switching the write protection tab over on the memory card.

Note that I left the error code out, as it does not really help. More on that further below.

The end-user will read such long error messages left-to-right, and may only understand them up to a point, but that could be enough to make the problem out and maybe to work around it. If there is a useful hint at the end, hopefully the user will also read it. Should the user decide to send the error message to the software developer, there will be enough detail towards the right to help locate the exact issue down some obscure library call.

Such an error message gets built from right to left. When the 'open' syscall fails, the OS delivers the error code (0x03A5) and the low-level error description "Cannot open the file with write access". The OS may add the suffix "Try switching the write protection tab over on the memory card" or an alternative like "The file system was mounted in read-only mode" after checking whether the card actually has such a switch that is currently switched on. A single string is built out of these components and gets returned to the level above in the call stack. Instead of a normal 'return' statement, you would raise a C++ exception with 'throw' (or fill in some error information object passed from above). At every relevant stage on the way up while unwinding the call stack (at every 'catch' point), the error string gains a new prefix (like "Error opening file "invoice arrears.txt": "), and the exception gets passed further up (gets 'rethrown'). At the top level (the last 'catch'), the final error message is presented to the user.

The source code will contain a large number of 'throw' statements but only a few 'catch/rethrow' points. There will be very few final 'catch' levels, except for user interface applications, where each button-press event handler will need one. However, all such user interface 'catch' points will look the same: they will probably call some helper routine in order to display a standard modal error message box.
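The right-to-left construction described above can be sketched in a few lines. This is only a minimal illustration under my own assumptions: the routine names are made up, the low-level failure is simulated instead of coming from a real system call, and the message texts are the ones from the printing example.

```cpp
#include <stdexcept>
#include <string>

// Stand-in for a failing system call wrapper. The failure is simulated here.
void simulated_open_for_writing ( const std::string & /* filename */ )
{
  throw std::runtime_error( "Cannot open the file with write access." );
}

void open_invoice_file ( const std::string & filename )
{
  try
  {
    simulated_open_for_writing( filename );
  }
  catch ( const std::exception & ex )
  {
    // First catch/rethrow point: prefix the name of the affected file.
    throw std::runtime_error( "Error opening file \"" + filename + "\": " + ex.what() );
  }
}

void print_arrears_letters ( void )
{
  try
  {
    open_invoice_file( "invoice arrears.txt" );
  }
  catch ( const std::exception & ex )
  {
    // Second catch/rethrow point: prefix the high-level operation.
    throw std::runtime_error( std::string( "Error printing letters for invoice arrears: " ) + ex.what() );
  }
}

// Top level (the last 'catch'): returns the final message, or an empty string on success.
std::string run_print_command ( void )
{
  try
  {
    print_arrears_letters();
    return "";
  }
  catch ( const std::exception & ex )
  {
    return ex.what();
  }
}
```

Each level only knows about its own context, yet the string returned by run_print_command() reads from the high-level operation on the left down to the low-level cause on the right.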

How to Write Error Handlers

Say you have a large program written in C++ with many nested function calls, like this example:

int main ( int argc, char * argv[] )

{

...

b();

...

}

void b ( void )

{

...

c("file1.txt");

c("file2.txt");

...

}

void c ( const char * filename )

{

...

d( filename );

...

}

void d ( const char * filename )

{

...

e( filename );

...

}

void e ( const char * filename )

{

// Error check example: we only accept filenames that are at least 10 characters long.

if ( strlen( filename ) < 10 )

{

// What now? Ideally, we should report that the filename should be at least 10 characters long.

}

// Yes, you should check the return value of printf(), see further below for more information.

if ( printf( "About to open file %s", filename ) < 0 )

{

// What now?

}

FILE * f = fopen( filename, ... );

if ( f == NULL )

{

// What now?

}

...

}

Let's try to deal with the errors in routine e() above. It's a real pain, as it distracts us from the real work we need to do. But it has to be done.

Here is a very common approach where all routines return an integer error code, like most Linux system calls do. Note that zero means no error.

int main ( int argc, char * argv[] )

{

...

int error_code = b();

if ( error_code != 0 )

{

fprintf( stderr, "Error %d calling b().", error_code );

return 1; // This is equivalent to exit(1);

// We could also return error_code directly, but you need to check

// what the exit code limit is on your operating system.

}

...

}

int b ( void )

{

...

int err_code_1 = c("file1.txt");

if ( err_code_1 != 0 )

{

return err_code_1;

}

int err_code_2 = c("file2.txt");

if ( err_code_2 != 0 )

{

return err_code_2;

}

...

}

int c ( const char * filename )

{

...

int err_code = d( filename );

if ( err_code != 0 )

return err_code;

...

}

int d ( const char * filename )

{

...

int err_code = e( filename );

if ( err_code != 0 )

return err_code;

...

}

int e ( const char * filename )

{

if ( strlen( filename ) < 10 )

{

// Return some non-zero value here, but which one?

// Shall we create our own list of error codes?

// Or should we just pick a random one from errno.h, like EINVAL?

}

if ( printf( "About to open file %s", filename ) < 0 )

{

// Return some non-zero value here, but which one? Note that printf() sets errno.

}

FILE * f = fopen( filename, ... );

if ( f == NULL )

{

fprintf( stderr, "Error %d opening file %s", errno, filename );

// Return some non-zero value here, but which one? Note that fopen() sets errno.

}

...

}

As shown in the example above, the code has become less readable. All function calls are now inside if() statements, and you have to manually check the return values for possible errors. Maintaining the code has become cumbersome.

There is just one place in routine main() where the final error message gets printed, which means that only the original error code makes its way to the top and any other context information gets lost, so it's hard to know what went wrong during which operation. We could call printf() at each point where an error is detected, like we do after the fopen() call, but then we would be calling printf() all over the place. Besides, we may want to return the error message to a caller over the network or display it to the user in a dialog box, so printing errors to the standard output may not be the right thing to do.

The same code written with C++ exceptions looks much more readable:

int main ( int argc, char * argv[] )

{

try

{

...

b();

...

}

catch ( const std::exception & e )

{

// We can decide here whether we want to print the error message to the console, write it to a log file,

// display it in a dialog box, send it back over the network, or all of those options at the same time.

fprintf( stderr, "Error calling b(): %s", e.what() );

return 1;

}

}

void b ( void )

{

...

c("file1.txt");

c("file2.txt");

...

}

void c ( const char * filename )

{

...

d( filename );

...

}

void d ( const char * filename )

{

...

e( filename );

...

}

void e ( const char * filename )

{

if ( strlen( filename ) < 10 )

{

throw std::runtime_error( "The filename should be at least 10 characters long." );

}

if ( printf( "About to open file %s", filename ) < 0 )

{

throw std::runtime_error( collect_errno_msg( "Cannot write to the application log: " ) );

}

FILE * f = fopen( filename, ... );

if ( f == NULL )

{

throw std::runtime_error( collect_errno_msg( "Error opening file %s: ", filename ) );

}

...

}

If the strlen() check above fails, the 'throw' statement stops execution of routine e() and control returns all the way up to the 'catch' statement in routine main() without executing any more code in any of the intermediate callers b(), c(), etc. (apart from the destructors of their local objects).

We still have a number of error-checking if() statements in routine e(), but we could write thin wrappers for library or system calls like printf() and fopen() in order to remove most of those if()'s. A wrapper like fopen_e() would just call fopen() and throw an exception in case of error, so the caller does not need to check with if() any more.
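A wrapper like fopen_e() could look roughly like this. Take it as a sketch under assumptions: the message format is my own choice for this illustration, and the code relies on fopen() setting errno, which POSIX guarantees but the C standard does not.

```cpp
#include <cerrno>
#include <cstdio>
#include <cstring>
#include <stdexcept>
#include <string>

// Calls fopen() and throws an exception in case of error,
// so that the caller does not need an if() statement.
std::FILE * fopen_e ( const char * filename, const char * mode )
{
  std::FILE * const f = std::fopen( filename, mode );

  if ( f == NULL )
  {
    // Save errno straight away, before any other call can overwrite it.
    const int saved_errno = errno;

    throw std::runtime_error( std::string( "Error opening file \"" ) + filename + "\": " +
                              std::strerror( saved_errno ) );
  }

  return f;
}
```

Note that strerror() is not guaranteed to be thread safe, so a production version would probably use a platform-specific alternative.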

Improving the Error Message with try/catch Statements

Let's improve routine e() so that all error messages generated by that routine automatically mention the filename. That should also be the case for any errors generated by any routines called from e(), even though those routines may not get the filename passed as a parameter. The improved code looks like this:

void e ( const char * filename )

{

try

{

if ( strlen( filename ) < 10 )

{

throw std::runtime_error( "The filename should be at least 10 characters long." );

}

if ( printf( "About to open file %s", filename ) < 0 )

{

throw std::runtime_error( collect_errno_msg( "Cannot write to the application log: " ) );

}

FILE * f = fopen( filename, ... );

if ( f == NULL )

{

throw std::runtime_error( collect_errno_msg( "Error opening the file: " ) );

}

...

}

catch ( const std::exception & e )

{

throw std::runtime_error( format_msg( "Error processing file \"%s\": %s", filename, e.what() ) );

}

catch ( ... )

{

throw std::runtime_error( format_msg( "Error processing file \"%s\": %s", filename, "Unexpected C++ exception." ) );

}

}

In the example above, helper routines format_msg() and collect_errno_msg() have not been introduced yet, see below for more information.
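In the meantime, here is a minimal sketch of what such helpers might look like. It is an assumption made for illustration only: the signatures are inferred from the calls above, a C++11 compiler is assumed, and robustness concerns such as low-memory situations are left out.

```cpp
#include <cerrno>
#include <cstdarg>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <string>
#include <vector>

// Common printf-style formatting into a std::string.
static std::string vformat_msg ( const char * format, va_list args )
{
  // First pass: find out how long the resulting string is.
  va_list args_copy;
  va_copy( args_copy, args );
  const int len = std::vsnprintf( NULL, 0, format, args_copy );
  va_end( args_copy );

  if ( len < 0 )
    return "Error formatting the error message.";

  // Second pass: format into a buffer of the right size.
  std::vector< char > buffer( static_cast< std::size_t >( len ) + 1 );
  std::vsnprintf( buffer.data(), buffer.size(), format, args );

  return std::string( buffer.data(), static_cast< std::size_t >( len ) );
}

std::string format_msg ( const char * format, ... )
{
  va_list args;
  va_start( args, format );
  const std::string msg = vformat_msg( format, args );
  va_end( args );
  return msg;
}

// Formats the given prefix and appends the description of the current errno value.
std::string collect_errno_msg ( const char * prefix_format, ... )
{
  const int saved_errno = errno;  // Capture it before anything can overwrite it.

  va_list args;
  va_start( args, prefix_format );
  const std::string prefix = vformat_msg( prefix_format, args );
  va_end( args );

  return prefix + std::strerror( saved_errno );
}
```

With these definitions, the prefix passed to collect_errno_msg() should end with a separator like ": ", because the errno description is appended directly after it.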

Note that all exception types are converted to a std::runtime_error object, so only the error message is preserved. There are other options that will be discussed in another section further ahead.

You may not need a catch(...) statement if your application uses exclusively exception classes ultimately derived from std::exception. However, if you always add one, the code will generate better error messages if an unexpected exception type does come up. Note that, in this case, we cannot recover the original exception type or error message (if there was a message at all), but the resulting error message should get the developer headed in the right direction. You should add at least one catch(...) statement at the application top-level, in the main() function. Otherwise, the application might end up in the unhandled exception handler, which may not be able to deliver a clue to the right person at the right time.

We could improve routine b() in the same way too:

void b ( void )

{

try

{

...

c("file1.txt");

c("file2.txt");

...

}

catch ( const std::exception & e )

{

throw std::runtime_error( format_msg( "Error loading your personal address book files: %s", e.what() ) );

}

}

You need to find a good compromise when placing such catch/rethrow blocks in the source code. Write too many, and the error messages will become bloated. Write too few of them, and the error messages may miss some important clue that would help troubleshoot the problem. For example, the error message prefix we just added to routine b() may help the user realise that the affected file is part of his personal address book. If the user has just added a new address book entry, he will probably guess that the new entry is invalid or has rendered the address book corrupt. In this situation, that little error message prefix provides the vital clue that removing the new entry or reverting to the last address book backup may work around the problem.

If you look at the original code, you'll realise that routine c() is actually the first one to get the filename as a parameter, so routine c() may be the optimal place for the try/catch block we added to routine e() above. Whether the best place is c() or e(), or both, depends on who may call these routines. If you move the try/catch block from e() to c() and someone calls e() directly from outside, he will need to provide the same kind of try/catch block himself. You need to be careful with your call-tree analysis, or you may end up mentioning the filename twice in the resulting error message, but that's still better than not mentioning it at all.

Using try/catch Statements to Clean Up

Sometimes, you need to add try/catch blocks in order to clean up after an error. Consider this modified c() routine from the example above:

void c ( const char * filename )

{

my_class * my_instance = new my_class();

...

d( filename );

...

delete my_instance;

}

If d() were to throw an exception, we would get a memory leak. This is one way to fix it:

void c ( const char * filename )

{

my_class * my_instance = new my_class();

try

{

...

d( filename );

...

}

catch ( ... )

{

delete my_instance;

throw;

}

delete my_instance;

}

Unfortunately, C++ lacks the 'finally' clause, which I consider to be a glaring oversight. Many other languages, such as Java or Object Pascal, do have 'finally' clauses. Without it, we need to write "delete my_instance;" twice in the example above. The trouble is, the code inside such catch(...) blocks tends to become out of sync with its counterpart below, and it is rarely tested. There is no easy way to avoid this kind of duplication, not even with goto, as such jumps are prohibited across the catch() block boundaries. You can factor out the clean-up code to a separate routine and call it twice, passing all clean-up candidates as function arguments. But most people resort to smart pointers and other wrapper classes, see further below for more information.
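As a quick preview of the smart pointer approach, here is a minimal sketch that assumes a C++11 compiler and uses std::unique_ptr. The instance counter in my_class and the always-failing d() are stand-ins I added in order to make the clean-up observable.

```cpp
#include <memory>
#include <stdexcept>

struct my_class
{
  static int live_instances;  // Only here to make the clean-up observable.

  my_class ( void )  { ++live_instances; }
  ~my_class ( void ) { --live_instances; }
};

int my_class::live_instances = 0;

// Stand-in for routine d(): it always fails in this sketch.
void d ( const char * /* filename */ )
{
  throw std::runtime_error( "Simulated failure in d()." );
}

void c ( const char * filename )
{
  // The destructor of my_instance deletes the object on every exit path,
  // whether c() returns normally or an exception propagates through it.
  const std::unique_ptr< my_class > my_instance( new my_class() );

  d( filename );
}
```

There is no try/catch block and no duplicated "delete" statement any more. The older std::auto_ptr class serves a similar purpose, but it was deprecated in C++11 and removed in C++17.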

The Final Version

This is what the example code above looks like with smart pointers, wrapper functions and a little extra polish:

int main ( const int argc, char * argv[] )

{

try

{

...

b();

...

}

catch ( const std::exception & e )

{

return top_level_error( e.what() );

}

catch ( ... )

{

return top_level_error( "Unexpected C++ exception." );

}

}

int top_level_error ( const char * const msg )

{

if ( fprintf( stderr, "Error calling b(): %s", msg ) < 0 )

{

// It's hard to decide what to do here. At least let the developer know.

assert( false );

}

return 1;

}

void b ( void )

{

try

{

...

c("file1.txt");

c("file2.txt");

...

}

catch ( const std::exception & e )

{

throw std::runtime_error( format_msg( "Error loading your personal address book files: %s", e.what() ) );

}

}

void c ( const char * filename )

{

std::auto_ptr< my_class > my_instance( new my_class() );

...

d( filename );

...

}

void d ( const char * filename )

{

...

e( filename );

...

}

void e ( const char * filename )

{

try

{

if ( strlen( filename ) < 10 )

{

log_and_throw( std::runtime_error( "The filename should be at least 10 characters long." ) );

}

printf_to_log_e( "About to open file %s", filename );

auto_close_file f( fopen_e( filename, ... ) );

const size_t read_count = fread_e( some_buffer, some_byte_count, 1, f.get_FILE() );

...

}

catch ( const std::exception & e )

{

throw std::runtime_error( format_msg( "Error processing file \"%s\": %s", filename, e.what() ) );

}

catch ( ... )

{

throw std::runtime_error( format_msg( "Error processing file \"%s\": %s", filename, "Unexpected C++ exception." ) );

}

}

Helper function log_and_throw() can optionally write the error message together with a call stack dump to the application's debug log file, before raising an exception with the given std::runtime_error object.
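Two of the helpers used in the code above could be sketched like this. Both are assumptions for illustration only, written for a C++11 compiler: stderr stands in for the debug log file, the call stack dump is left out because it is platform-specific, and the auto_close_file interface is inferred from the get_FILE() call above.

```cpp
#include <cstdio>
#include <stdexcept>

// Logs the error message and then throws a copy of the given exception object.
template < typename ExceptionType >
[[noreturn]] void log_and_throw ( const ExceptionType & ex )
{
  // Assumption: stderr plays the role of the application's debug log file.
  std::fprintf( stderr, "Throwing exception: %s\n", ex.what() );
  throw ex;
}

// Closes the file automatically when the object goes out of scope.
class auto_close_file
{
public:
  explicit auto_close_file ( std::FILE * f ) : m_file( f ) {}

  ~auto_close_file ( void )
  {
    // A failure in fclose() is ignored here, as destructors should not throw.
    if ( m_file != nullptr )
      std::fclose( m_file );
  }

  auto_close_file ( const auto_close_file & ) = delete;
  auto_close_file & operator= ( const auto_close_file & ) = delete;

  std::FILE * get_FILE ( void ) const { return m_file; }

private:
  std::FILE * m_file;
};
```

Ignoring the fclose() error is a shortcut. When writing to a file, a failed fclose() can mean lost data, so code that writes would probably want an explicit close method that does report errors.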

Why You Should Use Exceptions

The exception mechanism is the best way to write general error-handling logic. After all, it was designed specifically for that purpose. Even though the C++ language shows some weaknesses (lack of finally clause, need of several helper routines), the exception-enabled code example above shows a clear improvement. For more details, take a look at Bjarne Stroustrup's reasons.

Another Code Example

Here is another example:

void SerialiseInObjects ( InputStream * file )

{

...

file->ReadAndCheckHeader();

objectCount = file->ReadInt();

if ( objectCount > MAX_OBJ_COUNT )

throw std::runtime_error( "Maximum number of objects exceeded." );

for ( int i = 0; i < objectCount; ++i )

{

obj[i]->name = file->ReadString();

obj[i]->color = file->ReadInt();

obj[i]->shape = file->ReadInt();

obj[i]->posX = file->ReadInt();

obj[i]->posY = file->ReadInt();

obj[i]->height = file->ReadInt();

obj[i]->width = file->ReadInt();

...

}

}

Let's think for a moment about not using exceptions in the example above. Any ReadInt() call may fail at any time, if the data read is not a valid integer, or if we have reached the end of file, so we would need to wrap every serialisation call in a separate if() statement, which would impact code readability. Also, if we need to abort early and we are not using exception-safe techniques, we have to be careful not to leave a memory leak behind with an early return.
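To make that concrete, here is a cut-down, compilable sketch of the same routine written without exceptions. The IntStream class and the reduced Object structure are hypothetical stand-ins, and a C++11 compiler is assumed.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical input stream over a list of integers. ReadInt() reports
// failure through its return value instead of throwing.
class IntStream
{
public:
  explicit IntStream ( const std::vector< int > & data ) : m_data( data ), m_pos( 0 ) {}

  bool ReadInt ( int * value )
  {
    if ( m_pos >= m_data.size() )
      return false;  // End of data reached.

    *value = m_data[ m_pos++ ];
    return true;
  }

private:
  std::vector< int > m_data;
  std::size_t m_pos;
};

struct Object { int color, shape, posX, posY; };

const int MAX_OBJ_COUNT = 100;

// Every single read needs its own check and its own early return.
bool SerialiseInObjects ( IntStream * file, std::vector< Object > * objects )
{
  int objectCount;

  if ( ! file->ReadInt( &objectCount ) )
    return false;

  if ( objectCount < 0 || objectCount > MAX_OBJ_COUNT )
    return false;

  for ( int i = 0; i < objectCount; ++i )
  {
    Object obj;

    if ( ! file->ReadInt( &obj.color ) ) return false;
    if ( ! file->ReadInt( &obj.shape ) ) return false;
    if ( ! file->ReadInt( &obj.posX  ) ) return false;
    if ( ! file->ReadInt( &obj.posY  ) ) return false;

    objects->push_back( obj );
  }

  return true;
}
```

Even in this small version, the caller only learns that something failed. It does not know which field was affected or why, unless yet more code is added to carry that information upwards.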

We could try delaying some of the validation work, but then we have to validate at least some of the data read immediately. For example, in the case of an invalid objectCount, we may be reading forever or triggering an out-of-memory situation. Besides, keeping all the read and the validation code close together makes sense.

If something fails, we must not forget to store any error code or error message somewhere like errno before returning early. And don't forget to read it back later on when the error is being handled.

If you are serialising large amounts of information, traditional error checking becomes extremely tedious. That's probably the reason why, when an old document fails to read in a recent version of your favourite word processor, or when your favourite e-book format conversion tool cannot convert some old file, you get a generic failure message, and those bugs are rarely fixed. Without a proper error-handling strategy, it's just too hard to generate good error information. And without good error information, the software developers cannot know what went wrong, especially when dealing with complicated, half-documented file formats. After all, you cannot just e-mail them every document or every e-book that failed to load.

In spite of the reasons above, there are surprisingly many opponents to exception handling, especially in the context of the C++ programming language. While I don't share most of the criticism, there are still issues with some compilers and some C++ runtime libraries, even as late as year 2013, see further below for details.

Exceptions Are Everywhere

Modern applications and software frameworks tend to rely on C++ exceptions for error handling, and it is impractical to ignore C++ exceptions nowadays. The C++ Standard Template Library (STL), Microsoft's ATL and MFC are prominent examples. Just by using them you need to cater for any exceptions they might throw.

Exceptions are prevalent outside the C++ world: Java, JavaScript, C#, Objective-C, Perl and Emacs Lisp, for example, use exceptions for error-handling purposes. And the list goes on.

Even plain C has a similar setjmp/longjmp mechanism. The need to quickly unwind the call stack on an error condition is a very old idea pioneered by PL/I in 1964 and refined by CLU in the 1970s.
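For readers who have never seen it, this is roughly what the setjmp/longjmp mechanism looks like. The routine names and the global jump buffer are made up for this sketch.

```cpp
#include <csetjmp>

static std::jmp_buf g_error_jump;  // Hypothetical global "catch" point.

static void deep_routine ( int value )
{
  if ( value < 0 )
    std::longjmp( g_error_jump, 1 );  // Acts like 'throw': this call does not return.
}

static void middle_routine ( int value )
{
  deep_routine( value );  // No error check is needed at this level.
}

// Returns 0 on success or 1 if an error was signalled with longjmp().
int run_with_error_jump ( int value )
{
  if ( setjmp( g_error_jump ) != 0 )
    return 1;  // We land here after longjmp(), like in a 'catch' block.

  middle_routine( value );
  return 0;
}
```

Unlike C++ exceptions, longjmp() does not run any destructors on the way up. In C++, the behaviour is undefined if the jump bypasses objects with non-trivial destructors, so this technique is best left to plain C code.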

Exceptions Make it Easier to Repurpose Code

Exception handling allows you to separate error generation from error handling, which isolates the error checking code from the surrounding environment and facilitates its portability.

Consider the following code snippet:

FILE * f = fopen( filename, ... );

if ( f == NULL )

{

fprintf( stderr, "Error %d opening file %s", errno, filename );

exit( 1 );

}

You cannot easily repurpose that kind of code to run in another environment. First of all, calling exit() at the point of error is not appropriate in most circumstances. Furthermore, if that piece of code ends up in a shared library, and a remote user calls it indirectly over a Remote Procedure Call (RPC), there may be no stderr to write the error message to, and even if there is, it will not be easy to collect the message text and forward it to the remote RPC client.

Let's think for a moment where an error message from a piece of repurposed code might possibly land:

- Text console for an interactive process.

- Log file for a background process.

- System log for a service or daemon.

- Database record for a failed task in a multiuser batch processing scenario over a Web interface.

- Result of a remote procedure call (RPC).

- Error message box in a graphical interactive application.

Clearly, the code raising an error can never deal with the error message delivery itself, if you ever want to reuse the code. The error message must be passed all the way up to the caller, mostly unchanged, a task for which the exception mechanism is most suitable.
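The following sketch shows the same error-raising routine reused in two of those environments. All names are hypothetical, and the RpcReply structure merely stands in for whatever your RPC layer actually uses. A C++11 compiler is assumed.

```cpp
#include <cstdio>
#include <stdexcept>
#include <string>

// Reusable code: it only generates the error, it does not deliver it.
void load_settings ( const std::string & filename )
{
  if ( filename.empty() )
    throw std::runtime_error( "The settings filename is empty." );

  // The real work would go here.
}

// Delivery in a console tool: print to stderr and map to an exit code.
int run_as_console_tool ( const std::string & filename )
{
  try
  {
    load_settings( filename );
    return 0;
  }
  catch ( const std::exception & ex )
  {
    std::fprintf( stderr, "Error: %s\n", ex.what() );
    return 1;
  }
}

// Delivery in an RPC server: hand the message text back to the remote caller.
struct RpcReply
{
  bool        success;
  std::string error_message;
};

RpcReply run_as_rpc_handler ( const std::string & filename )
{
  try
  {
    load_settings( filename );
    return RpcReply{ true, "" };
  }
  catch ( const std::exception & ex )
  {
    return RpcReply{ false, ex.what() };
  }
}
```

Routine load_settings() stays the same in both cases. Only the top-level 'catch' decides where the message ends up.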

Downsides of Using C++ exceptions

Exceptions could make the code bigger and/or slower

This should not be the case, and even if it is, it is almost always an issue with the current version of the compiler or its C++ runtime library. For example, in my experience, GCC generates smaller exception-handling code for the ARM platform than for the PowerPC.

But first of all, even if the code size does increase or if the software becomes slower, it may not matter much. Better error-handling support may be much more important.

In theory, logic that uses C++ exceptions should generate smaller code than the traditional if/else approach, because the exception-handling support is normally implemented with stack unwind tables that can be efficiently shared (commoned up) at link time.

Because source code that uses exceptions does not need to check for errors at each call (with the associated branch/jump instruction), the resulting machine code should run faster in the normal (non-error) scenario and slower if an exception occurs (as the stack unwinder is a generic, table-driven routine). This is actually an advantage, as speed is not normally important when handling error conditions.

However, code size or speed may still be an issue in severely-constrained embedded environments. Enabling C++ exceptions has an initial impact on the code size, as the stack unwinding support needs to be linked in. Compilers may also conspire against you. Let's say you are writing a bare-metal embedded application for a small microcontroller that does not use dynamic memory at all (everything is static). With GCC, turning on C++ exceptions means pulling in the malloc() library, as its C++ runtime library creates exception objects on the heap. Such a strategy may be faster on average, but is not always welcome. The C++ specification allows for exception objects to be placed on the stack and to be copied around when necessary during stack unwinding. Another implementation could also use a separate, fixed-size memory area for that purpose. However, GCC offers no alternative implementation.

GCC's development is particularly sluggish in the embedded area. After years of neglect, version 4.8.0 finally gained configuration switch --disable-libstdcxx-verbose, which avoids linking in big chunks of the standard C I/O library just because you enabled C++ exception support. If you are not compiling a standard Linux application, chances are that the C++ exception tables are generated in the "old fashioned" way, which means that the stack unwind tables will have to be sorted on first touch. The first 'throw' statement will incur a runtime penalty, and, depending on your target embedded OS, this table sorting may not be thread safe, so you may have to sort the tables on start-up, increasing the boot time.

Debug builds may get bigger when turning C++ exceptions on. The compiler normally assumes that any routine can throw an exception, so it may generate more exception-handling code than necessary. Ways to avoid this are:

- Append "throw()" to the function declarations in the header files.

This indicates that the function will never throw an exception. Use it sparingly, or you may find it difficult to add an error check in one of those routines at a later point in time.

- Turn on global optimisation (LTO).

The compiler will then be able to determine whether a function called from another module could ever throw an exception, and optimise the callers accordingly.

Unfortunately, using GCC's LTO is not yet a viable option on many architectures. You may be tempted to discard LTO altogether because of the lack of debug information on LTO-optimised executables (as of GCC version 4.8).
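If your compiler supports C++11, the modern spelling of the "throw()" suffix is "noexcept". Dynamic exception specifications were deprecated in C++11, and "throw()" itself was removed in C++20. Here is a minimal sketch with made-up routine names:

```cpp
#include <stdexcept>

// A routine that promises not to throw. If an exception does escape from it
// at run time, std::terminate() is called.
int add_checksums ( int a, int b ) noexcept
{
  return a + b;
}

// A routine without such a promise: the compiler must assume that it may throw.
int parse_digit ( char c )
{
  if ( c < '0' || c > '9' )
    throw std::invalid_argument( "Not a decimal digit." );

  return c - '0';
}

// The noexcept operator lets you verify the promise at compile time.
static_assert( noexcept( add_checksums( 1, 2 ) ), "add_checksums() should be noexcept." );
static_assert( ! noexcept( parse_digit( '0' ) ), "parse_digit() is expected to be potentially throwing." );
```

The same caveat applies as with "throw()": once callers rely on the promise, adding an error check to such a routine later on becomes harder.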

Exceptions are allegedly unsafe because they tend to break existing code more easily

The usual argument is that, if you make a change somewhere deep down the code, an exception might come up at an unexpected place higher up the call stack and break the existing software. For someone used to the traditional C coding style (assuming he is not using setjmp/longjmp), it is not immediately obvious that program execution may interrupt its normal top-to-bottom flow at (almost) any point in time, whenever an error occurs.

However, I believe that developers are better off embracing the idea of defensive programming and exception safety from the start. With or without exceptions, errors do tend to come up at unexpected places in the end. Even if you are writing a pure math library, someone at some point in time is going to try to divide by zero somewhere deep down in a complicated algorithm.

It is true that, if the old code handles errors with manual if() statements, adding a new error condition normally means adding extra if() statements that make new code paths more obvious. However, when a routine gains an error return code, existing callers are often not amended to check it. Furthermore, it is unlikely that developers will review the higher software layers, or even test the new error scenario, so as to make sure that the application can handle the new error condition correctly.

More importantly, in such old code there is a strong urge to handle errors only whenever necessary, that is, only where error checks occur. As a result, if a piece of code was not expecting any errors from all the routines it calls, and one of those routines can now report an error, the calling code will not be ready to handle it. Therefore, the developer adding an error condition deep down below may need to add a whole bunch of if() statements in many layers above in order to handle that new error condition. You need to be careful when adding such if() statements: if any new error check could trigger an early return, you need to know what resources need to be cleaned up beforehand. That means inspecting a lot of older code that other developers have written. Anything that breaks further up is now your responsibility, for the older code was "working correctly" in the past. This amounts to a great social deterrent from adding new error checks.

Let's illustrate the problem with an example. Say you have this kind of code, which does not use exceptions at all:

void a ( void )

{

my_class * my_instance = new my_class();

...

b();

...

delete my_instance;

}

If b() does not return any error indication, there is no need to protect my_instance with a smart pointer. If b()'s implementation changes and it now needs to return an error indication, you should amend routine a() to deal with it as follows:

bool a ( void )

{

my_class * my_instance = new my_class();

...

if ( ! b() )

{

delete my_instance;

return false;

}

...

delete my_instance;

return true;

}

That means you have to read and understand a() in order to add the "return false;" statement in the middle. You need to check if it is safe to destroy the object at that point in time. Maybe you should change the implementation to use a smart pointer now, which may affect other parts of the code. Note that a() has gained a return value, so all callers need to be amended similarly. In short, you have to analyse and modify existing code all the way upwards in order to support the new error path.

If the original code had been written in a defensive manner, with techniques like Resource Acquisition Is Initialization, and had used C++ exceptions from scratch, chances are it would already have been ready to handle any new error conditions that could come up in the middle of execution. If not, any unsafe code (any resource not managed by a smart pointer, and so on) is a bug which can be more easily assigned to the original developer. Unsafe code may also be fixed during code reviews before the new error condition comes. Such code makes it easier to add error checks, because a developer does not need to check and modify so much code in the layers above, and is less exposed to blame if something breaks somewhere higher up as a result.

Therefore, for the reasons above, I am not convinced that relying on old-style if() statements for error-handling purposes helps writing better code in the long run.

C++ exceptions may be unsafe in interrupt context

The C++ standard mandates that it should be safe to copy exception objects as they get propagated up the call stack. The reason is that, in some compilers, throwing an exception is implemented in the same way as if the throwing routine had returned a C++ object to the caller. The throwing routine creates the exception object in its stack context, fills it with data, and then calls the copy constructor or operator= in order to copy the object's contents to another instance in the caller's stack area. Before returning to the caller, the throwing routine destroys the local exception object instance as part of its stack clean-up procedure.

When returning C++ objects, the compiler is allowed to optimise away such copying, but that only works well between one caller and its callee. Exceptions can propagate up many levels, and it would be inefficient to attempt that kind of optimisation across big call trees, especially for exception objects, which should rarely be thrown.

Copying exception objects during call unwinding is slow, but predictable. It may be possible to optimise it by creating a separate memory area outside the standard call stack to hold the current exception object. Such an optimisation could get complicated if an exception is thrown while processing a previous one.

The trouble is, GCC's one and only C++ exception implementation follows a different approach: exception objects are created on the heap, and only a pointer to the current exception object is propagated up the call tree. That means that throw ends up calling malloc() behind the scenes. I would normally prefer the other "slow and predictable" strategy to GCC's "optimised" implementation, because the time it takes to execute a malloc() call is usually not deterministic. However, given that exceptions will almost always contain heap-allocated, variable-length strings for rich error messages, GCC's choice does not really matter much in practice. It may even be faster, although speed shouldn't be so important when processing exceptions.

There is a catch, though: if you are writing embedded software in a bare-metal environment, it may not be safe to call malloc() within interrupt context. Therefore, even if you restrict your interrupt handler to simple exception objects which contain only integers, throwing an exception may still be unsafe. In order to avoid nasty surprises in such restricted environments, you should make sure that your bare-metal malloc() implementation asserts that it is not running in interrupt context.
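Such an assertion could look roughly like the following sketch. The names IsInInterruptContext(), CheckedMalloc() and the global flag are hypothetical. On a real target, the check would sit inside the malloc() implementation itself and would query the hardware (for instance, a CPU status register) instead of a software flag:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical stand-in for a platform-specific query. In this sketch,
// interrupt handlers are assumed to set the flag on entry and clear it on exit.
static volatile bool g_isInInterruptContext = false;

bool IsInInterruptContext ( void )
{
  return g_isInInterruptContext;
}

// Hypothetical wrapper that shows where the assertion belongs.
void * CheckedMalloc ( const std::size_t size )
{
  // Catches any allocation attempted from an interrupt handler, including
  // the hidden allocation behind a throw statement if the C++ runtime
  // obtains its memory through this routine.
  assert( !IsInInterruptContext() );

  return std::malloc( size );
}
```

Note that the assertion only helps in debug builds. It finds the offending code path during development, but it does not make the allocation safe.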

Even if you make sure that malloc() is still safe within interrupt context, the stack unwinding logic may not be reentrant or thread safe. You will probably find it hard to find any documentation whatsoever about your compiler vendor's stack unwinding implementation.

Social Resistance

This is in fact the hardest obstacle. Most developers will have heard about the supposed drawbacks of exception handling. Quick excuses will come up instantly, like "nobody else around is doing it anyway". That may be actually true for embedded software and most C/C++ Linux development, but such reasoning has little value and tends to obstruct progress in the long run.

Somebody will have to start writing a few helper functions and make sure the compiler gets the right flags. Last but not least, you may have to re-educate older team members in order to change entrenched habits. Overcoming social inertia can be the greatest challenge.

General Do's and Don'ts

Do Not Make Logic Decisions Based on the Type of Error

Error causes are manifold and mostly unforeseeable during development. Consider a software driver for a local hardware component such as a standard serial port, where some operations can only fail because of a handful of reasons, if at all. At a later point in time, you may want to access a remote serial port on another PC as if it were locally connected, so you write a virtual serial port driver that acts as a bridge between the two PCs. Suddenly, some serial port operations may fail because of network issues, which is a different type of error altogether. If the original serial port driver did not provide a flexible way of reporting errors, the end user will lose error information every time there is a network problem.

Furthermore, generic errors are sometimes broken down into several more-specific errors in order to help troubleshoot a difficult issue that is happening at a customer site. A developer refining such error checks under time pressure will probably not realise that he might be changing the public routine interface as well.

Therefore, it is best to avoid making logic decisions based on the type of error (exception subclass, error code, etc). Normally, the action to be taken depends on the source code position (considering the whole call stack) where the error happened. If some indication about how to handle an error is required, consider setting an explicit parameter in the error information (such as retry=yes/no flag), or, even better, try to move that kind of logic outside the error-handling domain.

Let's say that you wish to automatically retry a failed operation a few times before giving up, which is already a rare scenario. Chances are that there is a particular reason why something may need to be retried, like a critical resource being busy. You could check whether the resource is free upfront. If that is not possible, you may be able to resort to a version of the ResourceLock() routine which does not throw an error in the busy case. Such a routine could have a prototype like bool TryResourceLock ( void ); If the resource locking fails, you should then pass the error up as a simple boolean indication instead of throwing an exception. After all, the busy condition is not an unforeseeable, fatal type of error, but a normal, expected condition.
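The following sketch illustrates that approach. Only the TryResourceLock() prototype comes from the text above. The resource itself (modelled as a simple busy flag), ResourceUnlock(), ResourceUnlocker and DoWorkWithRetries() are hypothetical names made up for this example:

```cpp
#include <atomic>

// Hypothetical resource, modelled here as a simple busy flag.
static std::atomic< bool > g_isResourceBusy( false );

// Returns false if the resource is busy. Being busy is an expected
// condition, so it is not reported by throwing an exception.
bool TryResourceLock ( void )
{
  bool expected = false;
  return g_isResourceBusy.compare_exchange_strong( expected, true );
}

void ResourceUnlock ( void )
{
  g_isResourceBusy.store( false );
}

// Releases the resource on every exit path, including exceptions.
struct ResourceUnlocker
{
  ~ResourceUnlocker () { ResourceUnlock(); }
};

// Returns false if the resource was still busy after all attempts.
// Genuine errors during the actual work would still be thrown as exceptions.
bool DoWorkWithRetries ( const int maxAttemptCount )
{
  for ( int attempt = 0; attempt < maxAttemptCount; ++attempt )
  {
    if ( TryResourceLock() )
    {
      const ResourceUnlocker unlocker;

      // ... do the actual work here ...

      return true;
    }

    // A real implementation would probably wait a little before trying again.
  }

  return false;
}
```

The retry decision is visible in the function signatures, so a new caller cannot overlook it, and the error-handling infrastructure does not need to know anything about retrying.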

In the retry scenario described above, consider what would happen if you added a retry=yes/no flag to the error information, or if you implemented an exception subclass like CErrorNoRetry, instead of adding an explicit function argument for that purpose. This means that you would be using the error-handling infrastructure deep below in the specific task code in order to make logic decisions higher up in the global task retry module. If you then re-use the specific task code in some other software, it will not be immediately obvious that the new caller needs to support the retry mechanism as well, and the end user may end up getting a "retry error" before the developer notices the missing functionality.

Besides, it is unlikely that many error conditions should prompt a retry, while a number of other errors should not. Moreover, most subroutines called deep below will not be aware of the retry mechanism. In most cases, it's also hard to decide whether to retry or not: how should the program logic decide whether a network error is likely to be transient or permanent? Therefore, if you add a retry flag to the generic error information, you will probably find that only one or two places end up using it.

Never Ignore Error Indications

I once had Win32 GetCursorPos() failing on a remote Windows 2000 without a mouse when remotely controlled with a kind of VNC software. Because the code ignored the error indication, a random mouse position was passed along and it made the application randomly fail later on. As soon as I sat in front of the remote PC, I connected the mouse and then I could not reproduce the random failures any more. The VNC software emulated a mouse on the remote PC (a mouse cursor was visible), so the cause wasn't immediately obvious. And Windows 2000 without that VNC software also provided a mouse pointer position even if you didn't have one. It was the combination of factors that triggered the issue. It was probably a bug in Windows 2000 or in the VNC software, but still, even a humble assert around GetCursorPos(), which is by no means a proper error check, would have saved me much grief.

The upshot is, everything can fail at any point in time. Always check. If you really, really don't have the time, add at least a "to do" comment to the relevant place in the source code, so that the next time around you or some other colleague eventually adds the missing error condition check.
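As a small illustration, the sketch below wraps a C library call that reports failure through its return value, so that the error indication cannot be silently dropped. The helper name DeleteFileOrThrow() is hypothetical. The sketch also assumes that std::remove() sets errno on failure, which POSIX systems do but the C standard does not guarantee:

```cpp
#include <cerrno>
#include <cstdio>
#include <cstring>
#include <stdexcept>
#include <string>

// Hypothetical helper: deletes a file, or throws with a descriptive message.
void DeleteFileOrThrow ( const std::string & filename )
{
  if ( 0 != std::remove( filename.c_str() ) )
  {
    // Save errno straight away, before any other call can overwrite it.
    const int errorCode = errno;

    throw std::runtime_error( "Error deleting file \"" + filename + "\": " +
                              std::strerror( errorCode ) );
  }
}
```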

Restrict Expected, Ignored Failures to a Minimum

Sometimes failures are expected under certain circumstances. For example, if you are writing a "clean" rule for a GNU Make makefile, you have to consider the scenario where the user runs a "make clean" on an already-clean sandbox. Or maybe the last build failed somewhere halfway through, so not all files were generated. Therefore, the makefile code cannot complain and immediately stop if some of the files it is trying to delete are not present.

This is unfortunate, as chances are you will not realise if the makefile ever tries to delete a file that is no longer generated by the build rules. In the end, the "clean" target will probably drift out-of-sync with the build rules, and nobody will notice for a long time.

However, if you write an automated script that builds a program, installs it, and then deletes the temporary files, you know which files or directories should have been generated at the end of a successful build. Therefore, you should write the code so that it does complain and stop if it tries to delete a file or directory which does not exist. That way, you will notice straight away if the makefile has changed and a particular file or directory is not generated any more or now lands somewhere else.

The best way to prevent the "clean" rule from becoming out-of-sync with the build rules is to use a tool like autoconf in order to automatically generate the makefile. Otherwise, provide two targets, "clean-if-exists" and "clean-after-successful-build". Note that, if you use the standard "clean" name, many people will not realise that there is an alternative clean rule. If you do insist on using that name, make it an alias to "clean-if-exists", so at least it's clear to the developer what the distinction is. It is best to share the code between both makefile targets. Use a flag to tell whether the files or directories are expected to exist. Finally, as part of your release script, test both corner cases: "make clean-if-exists" on an already-clean sandbox, and "make clean-after-successful-build" after a successful build.

Never Kill Threads

Threads must always terminate by themselves. They can get an external notification that they should terminate, but the actual termination must be performed by the thread itself, ideally by returning from its top-level function. Avoid C#'s Thread.Abort, POSIX pthread_cancel() or similar calls. Killing a thread is not portable across platforms, tends to leak resources, and offers no guarantees about how long it takes for the target thread to terminate.

There are only two ways for threads to notice that they have been requested to terminate:

- For threads that are busy, their work packets must be divided into smaller units and the thread must manually check (poll) the termination condition between work units. If the time between checks is too long, termination can be unnecessarily delayed. If it is too short, performance may be affected.

- For threads that wait on an event loop, the termination notification must wake them up. This means that the event loop must include the termination condition in the list of objects waited upon, or the master thread must set some boolean termination flag first and then send some message that is guaranteed to wake the child thread's event loop up. In a Unix environment, it is common to create pipes for the sole purpose of getting a file handle to wait upon. Closing the pipe may be all that's needed in order to get the termination notification across.
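The first case (a busy thread that polls between work units) can be sketched as follows with the standard C++ thread library. The global flag, the work counter and the routine names are hypothetical. The event loop case is not shown, because the wake-up mechanism depends on the platform:

```cpp
#include <atomic>
#include <thread>

// Hypothetical termination request, set by the master thread.
static std::atomic< bool > g_isTerminationRequested( false );

// Hypothetical work counter, only here to make the work observable.
static std::atomic< unsigned > g_processedUnitCount( 0 );

void WorkerThreadMain ( void )
{
  // Poll the termination condition between small work units.
  while ( !g_isTerminationRequested.load() )
  {
    ++g_processedUnitCount;  // Stands for one small unit of work.
    std::this_thread::yield();
  }

  // The thread terminates by itself, by returning from its top-level function.
}

void RunAndStopWorker ( void )
{
  g_isTerminationRequested.store( false );

  std::thread worker( WorkerThreadMain );

  // Let the worker process at least one unit before asking it to stop.
  while ( g_processedUnitCount.load() == 0 )
    std::this_thread::yield();

  g_isTerminationRequested.store( true );

  // Wait without a timeout. std::thread::join() throws std::system_error on
  // failure. If nobody catches it, the program is terminated.
  worker.join();
}
```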

Any errors synchronising with a thread's termination (for example, a failed pthread_join(childThreadId)) should be treated as fatal and should lead to immediate application termination. Do not use a condition variable for termination purposes, because the child thread terminates after setting the condition variable, which introduces a race condition with the master thread waiting on that condition variable alone.

If the main thread encounters an error and decides to terminate, it should notify all other threads, wait for their termination, and then terminate itself. A graceful process termination allows the developer to trace all unreleased resources (memory blocks, file handles, etc) at the end, in order to verify that no leaks exist.

Do not wait for child threads with a timeout; always wait for an infinite time. Deadlocks should be identified, and not just hidden and ignored by means of a timer. Besides, on a system under heavy load, threads may take longer to terminate than originally estimated. Remember that, in a deadlocked application, you can break in with a debugger and dump the call stacks for all threads in order to find out what is causing the deadlock. Setting a timeout deprives the developer of that possibility.

Check Errors from printf() too

Nobody checks the return value from printf() calls, but you should, for the following reasons: